Innovative Barlow Twins Method Enhances Self-Supervised Learning


Chapter 1: Introduction to Barlow Twins

In a recent paper, Yann LeCun and colleagues from Facebook AI and New York University present Barlow Twins, a new approach to self-supervised learning (SSL) for computer vision. The method shows that highly effective image representations can be learned without manual labels, with results that in some cases surpass those of supervised learning.

Contemporary SSL techniques aim to learn representations that are invariant to distortions, or data augmentations, of the input. They typically do this by maximizing the similarity between the representations of several distorted versions of the same sample. As the LeCun team points out, however, such objectives admit trivial solutions in which the network outputs the same constant representation for every input, a failure known as collapse. Most existing approaches rely on a variety of mechanisms and careful implementation details to prevent it.
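
A toy illustration of why collapse is a problem: if the objective only rewards agreement between two distorted views, an encoder that ignores its input entirely achieves the best possible score. This sketch assumes NumPy, uses additive Gaussian noise as a stand-in for image augmentations, uses a mean-squared-distance objective as a simplified stand-in for the similarity objectives described above, and defines a hypothetical `collapsed_encoder`; none of these come from the paper.

```python
import numpy as np

def invariance_loss(z_a: np.ndarray, z_b: np.ndarray) -> float:
    """Naive invariance objective: mean squared distance between paired embeddings.

    Minimizing it pulls the two views of each sample together, but nothing
    stops every sample from being mapped to the same point.
    """
    return float(np.mean(np.sum((z_a - z_b) ** 2, axis=1)))

def collapsed_encoder(x: np.ndarray, dim: int = 4) -> np.ndarray:
    # Hypothetical encoder that ignores its input and returns a constant vector.
    return np.ones((x.shape[0], dim))

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 16))                      # 8 toy "images" with 16 features each
view_a = x + 0.1 * rng.normal(size=x.shape)       # two random distortions of each sample
view_b = x + 0.1 * rng.normal(size=x.shape)

z_a, z_b = collapsed_encoder(view_a), collapsed_encoder(view_b)
print(invariance_loss(z_a, z_b))  # 0.0: a perfect score from a representation that carries no information
```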

Section 1.1: The Barlow Twins Approach

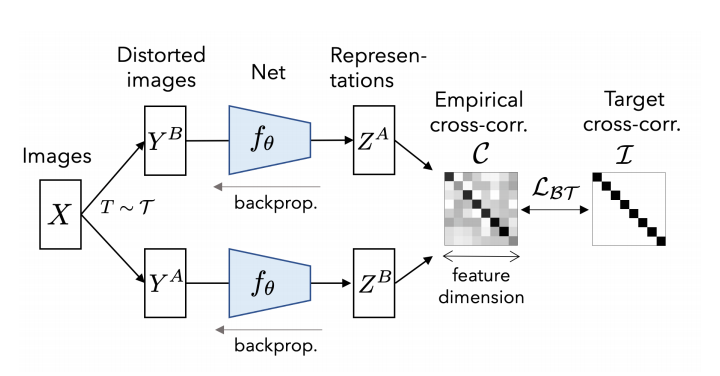

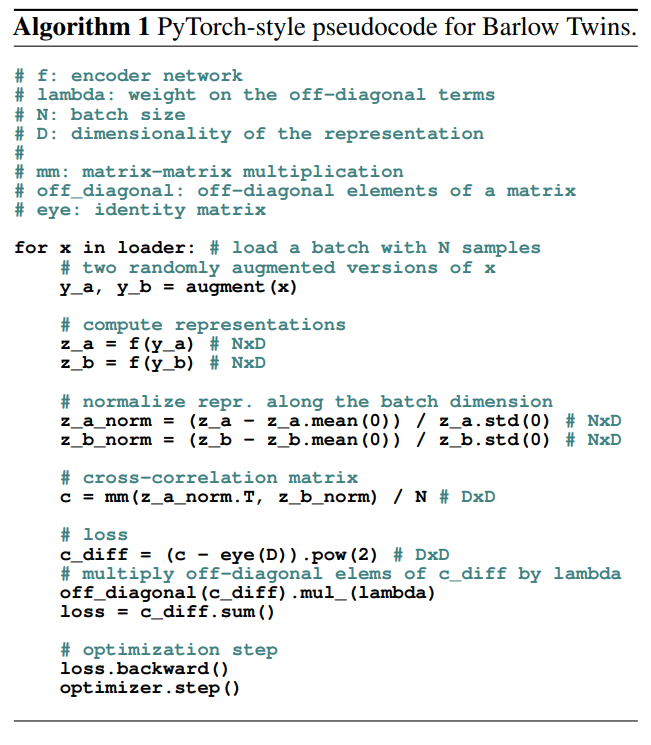

Barlow Twins tackles this issue head-on with a new objective function. Two identical networks each process a differently distorted version of the same input, and the objective is computed from the cross-correlation matrix between their output features. Training pushes this matrix as close to the identity matrix as possible: diagonal entries near 1 make the representations invariant to the distortions, while off-diagonal entries near 0 decorrelate the components of the output vector, minimizing the redundancy between them.
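
For reference, the objective can be written out as below. This follows our reading of the paper's formulation: b indexes the samples in a batch, i and j index embedding dimensions, z^A and z^B are the outputs of the two twins (assumed mean-centered along the batch dimension), and lambda > 0 trades off the two terms.

```latex
\mathcal{L}_{\mathcal{BT}} \triangleq
  \underbrace{\sum_i \left(1 - \mathcal{C}_{ii}\right)^2}_{\text{invariance term}}
  + \lambda \underbrace{\sum_i \sum_{j \neq i} \mathcal{C}_{ij}^{2}}_{\text{redundancy reduction term}},
\qquad
\mathcal{C}_{ij} \triangleq
  \frac{\sum_b z^A_{b,i}\, z^B_{b,j}}
       {\sqrt{\sum_b \left(z^A_{b,i}\right)^2}\,\sqrt{\sum_b \left(z^B_{b,j}\right)^2}}
```

Each entry of C lies between -1 and 1, so with lambda > 0 the loss reaches zero exactly when C equals the identity matrix.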

The method takes its name from the neuroscientist Horace Barlow. Inspired by his 1961 paper on the principles underlying the transformation of sensory messages, Barlow Twins applies redundancy reduction, a principle proposed to explain the organization of the visual system, to self-supervised learning.

Section 1.2: Objective Function Advantages

The Barlow Twins objective resembles other SSL objectives, but it differs conceptually in ways that bring practical benefits over contrastive methods based on the InfoNCE loss. In particular, Barlow Twins does not rely on large numbers of negative samples, so it works well with smaller batches and benefits from very high-dimensional representations.
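
To make the point about negatives concrete, here is a minimal NumPy sketch of the loss. The function name `barlow_twins_loss`, the default trade-off `lam=5e-3`, the small `eps` for numerical safety, and the random toy embeddings are all our assumptions, not code from the paper. Each embedding dimension is standardized along the batch, the D x D cross-correlation matrix is formed, and its distance from the identity is penalized. Only the N positive pairs in the batch enter the loss; no negative samples are drawn or compared.

```python
import numpy as np

def barlow_twins_loss(z_a: np.ndarray, z_b: np.ndarray, lam: float = 5e-3, eps: float = 1e-9) -> float:
    """Sketch of the Barlow Twins objective for two (N, D) batches of embeddings.

    Row b of z_a and row b of z_b are the embeddings of two distortions of sample b.
    `lam` is an assumed default for the trade-off between the two terms.
    """
    n = z_a.shape[0]
    # Standardize each embedding dimension along the batch (mean 0, std 1),
    # so that entries of the cross-correlation matrix are correlation coefficients.
    z_a = (z_a - z_a.mean(axis=0)) / (z_a.std(axis=0) + eps)
    z_b = (z_b - z_b.mean(axis=0)) / (z_b.std(axis=0) + eps)
    c = z_a.T @ z_b / n                                  # (D, D) cross-correlation matrix
    diag = np.diag(c)
    invariance = np.sum((1.0 - diag) ** 2)               # pull diagonal entries toward 1
    redundancy = np.sum(c ** 2) - np.sum(diag ** 2)      # push off-diagonal entries toward 0
    return float(invariance + lam * redundancy)

rng = np.random.default_rng(0)
n, d = 32, 1024                                      # small batch, high-dimensional embeddings
z_a = rng.normal(size=(n, d))
z_b = z_a + 0.1 * rng.normal(size=(n, d))            # toy stand-in for a second distorted view
print(barlow_twins_loss(z_a, z_b))

# A collapsed encoder (same vector for every input) standardizes to all zeros,
# so every diagonal term contributes (1 - 0)^2 and the loss equals d.
collapsed = np.ones((n, d))
print(barlow_twins_loss(collapsed, collapsed))       # 1024.0
```

The collapsed case shows why this objective resists trivial constant representations: a constant output has no variance along the batch, so its cross-correlation matrix is all zeros and the invariance term alone contributes one unit per embedding dimension.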

Chapter 2: Performance Evaluation

The researchers pretrained Barlow Twins with self-supervised learning on the ImageNet ILSVRC-2012 dataset and then assessed the resulting representations through transfer learning on various datasets and computer vision tasks, focusing on image classification and object detection.

The first video, "Jure Žbontar | Barlow Twins: Self-Supervised Learning via Redundancy Reduction," provides insights into the methodology and findings of the Barlow Twins approach.

The second video, "Barlow Twins: Self-Supervised Learning via Redundancy Reduction," explores further implications and applications of the technique.

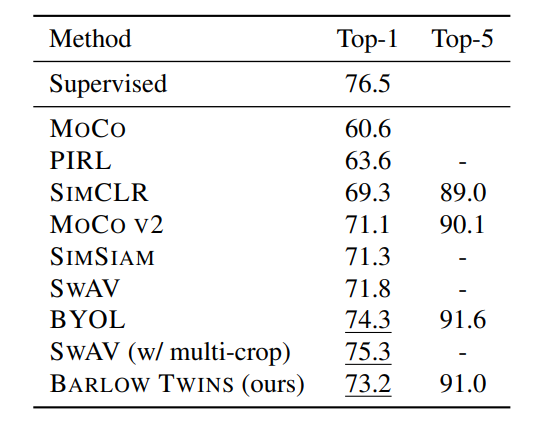

The findings show that Barlow Twins achieves 73.2% top-1 accuracy under linear evaluation on ImageNet, on par with the leading self-supervised methods. The top three self-supervised methods are highlighted.
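
"Linear evaluation" means freezing the pretrained network and training only a linear classifier on its output features; the reported top-1 accuracy is that classifier's accuracy. The toy sketch below illustrates the protocol under loud assumptions: NumPy, synthetic Gaussian clusters standing in for frozen Barlow Twins embeddings, and full-batch gradient descent on a softmax classifier inside a hypothetical `linear_probe_accuracy` helper. It is not the paper's training setup.

```python
import numpy as np

def linear_probe_accuracy(train_x, train_y, test_x, test_y, num_classes, lr=0.1, epochs=200):
    """Hypothetical helper: fit a softmax linear classifier on frozen features
    with full-batch gradient descent and return top-1 accuracy on the test split."""
    n, d = train_x.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[train_y]
    for _ in range(epochs):
        logits = train_x @ w + b
        logits -= logits.max(axis=1, keepdims=True)   # subtract row max for numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)     # softmax probabilities
        grad = (probs - onehot) / n                   # gradient of mean cross-entropy w.r.t. logits
        w -= lr * train_x.T @ grad                    # only the linear layer is trained
        b -= lr * grad.sum(axis=0)
    preds = np.argmax(test_x @ w + b, axis=1)
    return float(np.mean(preds == test_y))

# Synthetic stand-in for frozen embeddings: three well-separated Gaussian clusters.
rng = np.random.default_rng(0)
num_classes, dim = 3, 16
centers = rng.normal(size=(num_classes, dim))

def make_split(n):
    y = rng.integers(0, num_classes, size=n)
    x = centers[y] + 0.3 * rng.normal(size=(n, dim))
    return x, y

train_x, train_y = make_split(300)
test_x, test_y = make_split(100)
acc = linear_probe_accuracy(train_x, train_y, test_x, test_y, num_classes)
print(f"linear-probe top-1 accuracy: {acc:.2f}")
```

Because the features are never updated, the probe measures how linearly separable the learned representation already is, which is why it is a common yardstick for comparing self-supervised methods.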

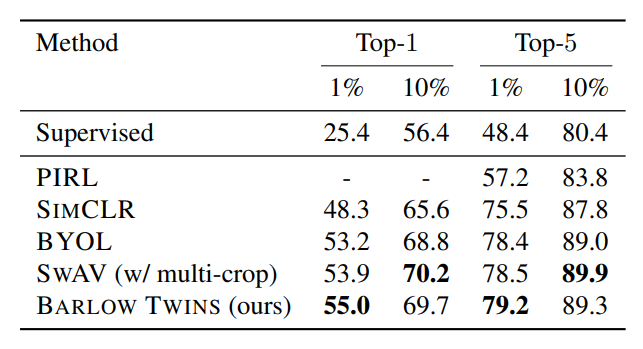

In semi-supervised settings that use only 1% or 10% of the training data, Barlow Twins performs on par with or better than competing methods.

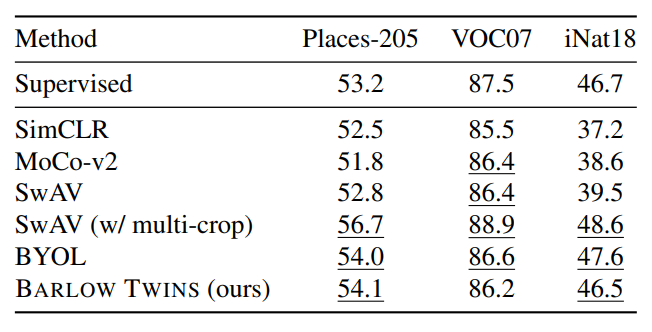

For transfer learning on image classification, Barlow Twins performs strongly relative to prior methods, outperforming SimCLR and MoCo-v2 on all datasets.

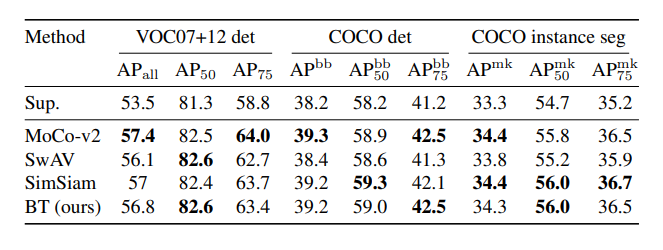

On object detection and instance segmentation tasks, Barlow Twins performs on par with or better than leading representation learning techniques.

The results suggest that Barlow Twins matches or surpasses previous state-of-the-art self-supervised methods while being conceptually simpler, avoiding trivial constant representations by design. The researchers propose that the method is just one possible application of the information bottleneck principle to SSL, and that future refinements could yield even more effective solutions.
