# Insights from My Experience with Prompt Engineering Courses


Understanding Prompt Engineering

Recently, I spent a week working through several Pluralsight courses on prompt engineering for text-based large language models (LLMs) such as ChatGPT. My aim was to identify what the classes had in common and where they differed, and I compiled my notes to share my findings.

What Is Prompt Engineering?

At its core, prompt engineering is the skill of formulating better questions to elicit more accurate responses.

Why Is Prompt Engineering Important?

Prompt engineering addresses several challenges that frequently occur when interacting with AI models, including:

- Model Bias: AI systems are trained on extensive datasets, which may contain biases.

- Unpredictability: The same prompt may produce inconsistent outputs.

- Lack of Real-World Knowledge: LLMs have no genuine awareness of the world, so they need context in the prompt to respond well.

- Language and Cultural Nuances: Models may struggle with idioms and cultural references.

- Hallucinations: Models trained on inadequate data might generate confident yet unfounded responses.

The goal of mastering prompt engineering is to improve accuracy and performance by gaining finer control over the model's output.

Crafting Effective Prompts

Developing effective prompts is often a trial-and-error process that takes several iterations to get the desired output from an LLM such as OpenAI's GPT models. Before looking at what makes a prompt effective, let’s first clarify what a prompt contains.

Each of the three courses I took defined the "anatomy of a prompt" slightly differently, so I’ve combined the components mentioned across all of them:

Anatomy of a Prompt

- Persona/Tone: What role and tone should the model adopt?

- Instructions: What tasks should the model perform?

- Input Content/Data: The text that needs processing.

- Format: Desired output specifications, such as “in one word” or “in one sentence.”

- Constraints: Any limitations on the response.
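To see how these components fit together, here is a minimal Python sketch that assembles them into a single prompt string. The `PromptParts` class, the `build_prompt` function, and the ordering of the parts are illustrative assumptions rather than anything prescribed by the courses; the input data is placed last, after a ### delimiter, so the model can tell instructions from data.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """Hypothetical container for the five prompt components."""
    persona: str = ""        # role/tone, e.g. "You are a friendly science teacher."
    instructions: str = ""   # the task to perform
    input_data: str = ""     # the text that needs processing
    output_format: str = ""  # e.g. "Answer in one sentence."
    constraints: str = ""    # limits on the response


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty components into one prompt (ordering is an assumption)."""
    header = [parts.persona, parts.instructions, parts.output_format, parts.constraints]
    prompt = "\n".join(line for line in header if line)
    if parts.input_data:
        # A ### delimiter marks where the instructions end and the input begins.
        prompt += "\n\n###\n" + parts.input_data
    return prompt


if __name__ == "__main__":
    print(build_prompt(PromptParts(
        persona="You are a friendly science teacher.",
        instructions="Explain the text below to a 10-year-old.",
        input_data="Photosynthesis converts light energy into chemical energy.",
        output_format="Answer in one sentence.",
        constraints="Do not use technical jargon.",
    )))
```

Keeping each component in its own field makes it easy to change one part (say, the persona) between iterations while holding the rest constant.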

Types of Prompts

#### Text Summarization

When requesting a summary from ChatGPT, it's beneficial to use a delimiter like ### to distinguish where the prompt concludes and the input begins. For instance:

Summarize the key concepts from the text below.

###

<Input text here>

You can tailor this prompt to a specific audience, add a word limit, or ask the model to focus on a particular perspective.
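The tailoring options can be folded into a small prompt builder. This is a sketch under assumptions: the function name `summary_prompt` and the exact wording it produces are illustrative, and the input follows a single ### delimiter as described above.

```python
from typing import Optional


def summary_prompt(text: str,
                   audience: Optional[str] = None,
                   max_words: Optional[int] = None,
                   perspective: Optional[str] = None) -> str:
    """Build a summarization prompt; audience, word limit, and focus are optional."""
    instruction = "Summarize the key concepts from the text below"
    if audience:
        instruction += f" for {audience}"
    if perspective:
        instruction += f", focusing on {perspective}"
    instruction += "."
    if max_words is not None:
        instruction += f" Use at most {max_words} words."
    # The ### delimiter separates the instruction from the input text.
    return f"{instruction}\n\n###\n{text}"


if __name__ == "__main__":
    print(summary_prompt("<Input text here>", audience="high school students", max_words=100))
```

Because the options only change the instruction line, you can compare several variants of the same summary request side by side.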

#### Text Extraction

You can instruct ChatGPT to extract specific information from a provided text. For example:

From the following text, extract all counties, schools, and subjects.

Desired format:

COUNTIES: [Counties]

SCHOOLS: [Schools]

SUBJECTS: [Subjects]

<Input text here>
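Asking for a fixed output format pays off when you process the response in code. The following sketch assumes the model followed the desired format, with one comma-separated `LABEL: ...` line per category, and turns the response into a Python dictionary. The function name `parse_labeled_output` and the sample response are made up for illustration; real model output may need more defensive handling.

```python
def parse_labeled_output(response: str, labels: list[str]) -> dict[str, list[str]]:
    """Parse lines such as 'COUNTIES: Kent, Essex' into {'COUNTIES': ['Kent', 'Essex']}.

    Labels are matched case-insensitively; labels missing from the response
    map to an empty list.
    """
    result: dict[str, list[str]] = {label: [] for label in labels}
    by_upper = {label.upper(): label for label in labels}
    for line in response.splitlines():
        name, sep, values = line.partition(":")
        key = by_upper.get(name.strip().upper())
        if sep and key is not None:
            result[key] = [v.strip() for v in values.split(",") if v.strip()]
    return result


if __name__ == "__main__":
    # Made-up response in the desired format.
    sample = "COUNTIES: Kent, Essex\nSCHOOLS: Hillview High\nSUBJECTS: Maths, History"
    print(parse_labeled_output(sample, ["COUNTIES", "SCHOOLS", "SUBJECTS"]))
```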

#### Code Generation

Here are two prompts for generating Python code. The first is written as code comments; the second is a triple-quoted docstring containing a task list:

# Python3

# Write code to calculate the mean distance between an array of points

"""

- Create a list of first names.

- Create a list of last names.

- Randomly combine them into a list of 10 full names.

"""

When generating code, keep your request precise and leave out extraneous details that could distract the model.
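For reference, here is one plausible Python answer to each prompt, the kind of code you might expect back. The first prompt is ambiguous; this sketch assumes "mean distance" means the average Euclidean distance over every pair of points. The name lists and function names are made up for illustration.

```python
import itertools
import math
import random
from typing import Optional, Sequence


# Prompt 1: "Write code to calculate the mean distance between an array of points"
# (interpreted here as the mean Euclidean distance over all pairs of points).
def mean_pairwise_distance(points: Sequence[Sequence[float]]) -> float:
    pairs = list(itertools.combinations(points, 2))
    if not pairs:
        raise ValueError("need at least two points")
    return sum(math.dist(p, q) for p, q in pairs) / len(pairs)


# Prompt 2: lists of first and last names, randomly combined into 10 full names.
FIRST_NAMES = ["Ada", "Grace", "Alan", "Linus", "Margaret"]
LAST_NAMES = ["Lovelace", "Hopper", "Turing", "Torvalds", "Hamilton"]


def random_full_names(count: int = 10, seed: Optional[int] = None) -> list[str]:
    rng = random.Random(seed)  # pass a seed for reproducible output
    return [f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}" for _ in range(count)]


if __name__ == "__main__":
    print(mean_pairwise_distance([(0, 0), (3, 0), (0, 4)]))  # 4.0
    print(random_full_names())
```

Comparing the model's output against a reference like this is a quick way to see whether your prompt was specific enough.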

#### Text Transformation

Text transformation requests can encompass various tasks, such as:

- Language translation

- Grammar and spelling corrections

- Tone adjustments (from casual to formal)

- Format conversions (e.g., text to HTML)
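One way to keep these requests consistent is a small table of reusable instruction templates, one per transformation type. The template wording, task names, and the `transform_prompt` helper below are illustrative assumptions; the input again follows a ### delimiter.

```python
TEMPLATES = {
    "translate": "Translate the text below into {language}.",
    "proofread": ("Correct the grammar and spelling in the text below. "
                  "Return only the corrected text."),
    "formal": "Rewrite the text below in a formal tone.",
    "html": "Convert the text below into HTML, wrapping each paragraph in <p> tags.",
}


def transform_prompt(task: str, text: str, **options: str) -> str:
    """Fill in the template for `task` and append the input after a ### delimiter."""
    if task not in TEMPLATES:
        raise ValueError(f"unknown task {task!r}; choose from {sorted(TEMPLATES)}")
    instruction = TEMPLATES[task].format(**options)  # e.g. language="French"
    return f"{instruction}\n\n###\n{text}"


if __name__ == "__main__":
    print(transform_prompt("translate", "Where is the train station?", language="French"))
```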

Evaluating Prompts

There are two primary approaches to assessing the effectiveness of prompts: objective and subjective metrics.

- Objective Metrics: These include processing speed, response time, accuracy, and relevance.

- Subjective Metrics: These focus on coherence, tone, clarity, and overall helpfulness.

Improving prompt performance may involve fine-tuning based on these evaluations.
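Objective metrics lend themselves to automation. The sketch below scores a prompt on two of them, accuracy and response time, over a handful of test cases. The `ask` parameter is a placeholder for whatever function sends a prompt to your model and returns its text (no real API is called here), and the exact-match rule for accuracy is a simplifying assumption; subjective metrics such as tone and clarity are not covered.

```python
import time
from typing import Callable


def evaluate_prompt(ask: Callable[[str], str],
                    cases: list[tuple[str, str]]) -> dict[str, float]:
    """Return accuracy and mean response time (seconds) over (prompt, expected) cases.

    An answer counts as correct if it equals the expected answer after
    trimming whitespace and ignoring case.
    """
    if not cases:
        raise ValueError("need at least one test case")
    correct = 0
    elapsed = 0.0
    for prompt, expected in cases:
        start = time.perf_counter()
        answer = ask(prompt)
        elapsed += time.perf_counter() - start
        if answer.strip().lower() == expected.strip().lower():
            correct += 1
    return {"accuracy": correct / len(cases), "mean_seconds": elapsed / len(cases)}


if __name__ == "__main__":
    # A fake "model" so the example runs offline.
    canned = {"Capital of France? Answer in one word.": "Paris"}
    print(evaluate_prompt(lambda p: canned.get(p, "?"),
                          [("Capital of France? Answer in one word.", "paris")]))
```

Running the same cases against two prompt variants gives a like-for-like comparison before you decide which one to keep.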

Fine-Tuning Techniques

To reduce repetitive output, you might add a directive like “but don’t repeat any sentences” to your prompt. Upgrading the model version (e.g., from GPT-3.5 to GPT-4) can also improve responses. If the model fabricates answers, you can instruct it to respond with “?” when it is uncertain.
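Both directives can be applied programmatically when a previous output shows the problem. The sketch below flags sentences that appear more than once in a response and, if any do, appends the "don't repeat" directive; the "?" instruction is added only on request, since fabrication cannot be detected this simply. Splitting sentences on ., ! and ? is a rough heuristic, and the helper names are hypothetical.

```python
import re

NO_REPEAT = "but don't repeat any sentences"
IF_UNSURE = 'If you are uncertain, respond with "?".'


def repeated_sentences(text: str) -> list[str]:
    """Return (lower-cased) sentences that occur more than once in `text`."""
    # Rough heuristic: split after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    counts: dict[str, int] = {}
    for sentence in sentences:
        key = sentence.lower()
        counts[key] = counts.get(key, 0) + 1
    return [key for key, n in counts.items() if n > 1]


def revise_prompt(prompt: str, last_output: str, allow_unsure: bool = False) -> str:
    """Append the directives described above based on the previous output."""
    revised = prompt.rstrip()
    if repeated_sentences(last_output):
        revised = revised.rstrip(".") + ", " + NO_REPEAT + "."
    if allow_unsure:
        revised += " " + IF_UNSURE
    return revised


if __name__ == "__main__":
    previous = "Cats are great. Dogs are loyal. Cats are great."
    print(revise_prompt("Describe pets.", previous))
```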

An intriguing approach I discovered was asking ChatGPT to refine its own prompts. For example:

April is a skilled Python developer. Ken is an expert in crafting prompts that enhance model performance. April's role is to assist Ken in devising the most effective prompt to yield accurate and relevant responses. Write a dialogue between April and Ken discussing how to refine an initial prompt. The dialogue concludes when they agree on a final prompt.

Original Prompt: “How are black holes formed?”

Refined Prompt: “Explain the astrophysical processes that lead to the formation of stellar-mass black holes.”
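If you use this trick often, the dialogue prompt can be turned into a reusable template. In this sketch, the instruction to finish with a line starting "Final prompt:" is an assumption added here (it is not part of the original prompt) that makes the agreed prompt easy to pull out of the dialogue; the function names are hypothetical.

```python
from typing import Optional

TEMPLATE = (
    "April is a skilled Python developer. Ken is an expert in crafting prompts "
    "that enhance model performance. April's role is to assist Ken in devising "
    "the most effective prompt to yield accurate and relevant responses. Write a "
    "dialogue between April and Ken discussing how to refine an initial prompt. "
    "The dialogue concludes when they agree on a final prompt.\n"
    # Added assumption: a fixed last line so the result can be parsed.
    'End with a line of the form: Final prompt: "<prompt>"\n\n'
    'Initial prompt: "{original}"'
)


def refinement_prompt(original: str) -> str:
    """Insert the user's initial prompt into the April/Ken dialogue template."""
    return TEMPLATE.format(original=original)


def extract_final_prompt(dialogue: str) -> Optional[str]:
    """Return the text after the last 'Final prompt:' line, or None if absent."""
    for line in reversed(dialogue.splitlines()):
        line = line.strip()
        if line.startswith("Final prompt:"):
            return line[len("Final prompt:"):].strip().strip('"')
    return None


if __name__ == "__main__":
    print(refinement_prompt("How are black holes formed?"))
```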

Utilizing Advanced Prompt Techniques

- Zero-shot prompting: This involves a straightforward prompt without examples, relying on the model's general knowledge.

- Few-shot prompting: This technique provides a few contextual examples to enhance performance, although it consumes more tokens.

- Chain-of-thought prompting: This method breaks complex problems into manageable steps, encouraging thorough and careful responses.

Knowledge augmentation can also improve performance by supplying additional structured training data.
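The zero-shot, few-shot, and chain-of-thought techniques differ mainly in what surrounds the question. The sketch below builds each variant as a plain string using a simple Q:/A: layout (an assumption; any consistent layout works). The chain-of-thought version uses the widely cited cue "Let's think step by step"; including worked step-by-step examples is another common way to apply the technique.

```python
def zero_shot(question: str) -> str:
    """No examples: the model relies on its general knowledge."""
    return f"Q: {question}\nA:"


def few_shot(question: str, examples: list[tuple[str, str]]) -> str:
    """Prepend worked examples; each one adds prompt tokens."""
    shots = "".join(f"Q: {q}\nA: {a}\n\n" for q, a in examples)
    return shots + zero_shot(question)


def chain_of_thought(question: str) -> str:
    """Ask the model to reason through intermediate steps before answering."""
    return f"Q: {question}\nA: Let's think step by step."


if __name__ == "__main__":
    examples = [("Is 'I loved it' positive or negative?", "positive"),
                ("Is 'Total waste of time' positive or negative?", "negative")]
    print(few_shot("Is 'Better than expected' positive or negative?", examples))
```

The few-shot string grows with every example, which is where the extra token cost comes from.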

Optimizing Performance

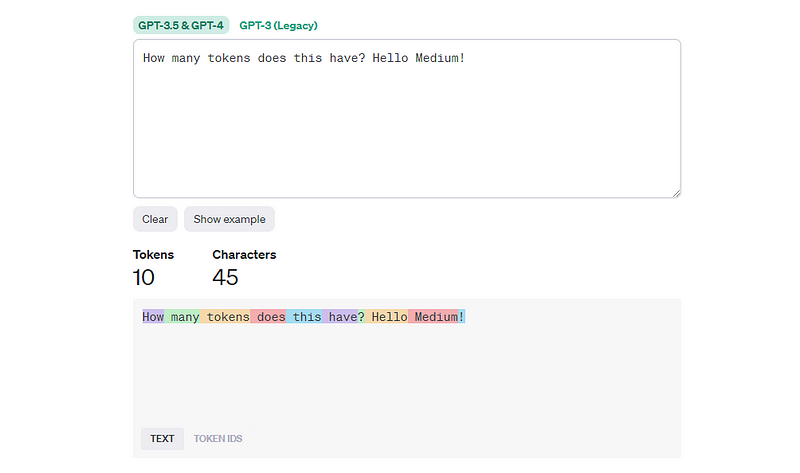

Tokens are the chunks of text that OpenAI's models process and that OpenAI uses for billing; in English text, a token is typically about four characters long. It’s essential to understand that LLMs predict the next token rather than the next word.
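Because billing and limits are counted in tokens, a quick estimate is often handy. The sketch below applies the four-characters-per-token rule of thumb; it is only an approximation for English text, and an exact count requires the model's own tokenizer (for OpenAI models, the tiktoken library provides one).

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Approximate token count using the ~4 characters per token rule of thumb."""
    if not text:
        return 0
    # Round up so a short remainder still counts as a token.
    return math.ceil(len(text) / chars_per_token)


if __name__ == "__main__":
    prompt = "Explain the astrophysical processes that lead to black holes."
    print(len(prompt), "characters ->", estimate_tokens(prompt), "tokens (approx.)")
```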

The total wait time for a response from ChatGPT includes various factors:

- User-to-API latency

- Prompt token processing

- Token generation

- API-to-user latency

Other elements influencing latency include:

- The model version (more capable models, such as GPT-4, tend to have higher latency)

- The number of completion tokens generated
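A simple way to reason about these factors is to treat the total wait as the sum of the four components, with generation time growing roughly in proportion to the number of completion tokens. The linear model and the class below are simplifying assumptions for illustration, not measured or published figures; plug in your own measurements.

```python
from dataclasses import dataclass


@dataclass
class LatencyEstimate:
    """Components of the total wait, in seconds (values you would measure)."""
    user_to_api: float
    prompt_processing: float
    seconds_per_completion_token: float  # assumed roughly constant
    completion_tokens: int
    api_to_user: float

    def generation(self) -> float:
        # Generation time grows with the number of completion tokens.
        return self.seconds_per_completion_token * self.completion_tokens

    def total(self) -> float:
        return (self.user_to_api + self.prompt_processing
                + self.generation() + self.api_to_user)
```

Under this model, halving `completion_tokens` halves the generation term while leaving the network and prompt-processing terms unchanged, which is why capping the length of the completion is usually the most direct lever.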

To manage latency, consider:

- Setting a stop sequence so generation ends early (API only)

- Setting the max_tokens attribute to cap the length of the completion (API only)

- Keeping the n attribute, which sets the number of responses, low (default is 1, API only)

- Enabling the stream attribute to receive tokens as they are generated (API only)
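For API users, these settings are fields in the request body. The sketch below builds a Chat Completions request as a Python dictionary without sending it; the model name and default values are placeholders, and the helper `chat_request` is hypothetical. Parameter support varies by model (for example, newer OpenAI models expect max_completion_tokens instead of max_tokens), so check the current API reference before relying on it.

```python
import json
from typing import Optional


def chat_request(prompt: str,
                 model: str = "gpt-4",           # placeholder model name
                 max_tokens: int = 150,          # cap on completion length
                 stop: Optional[list[str]] = None,
                 n: int = 1,                     # number of completions
                 stream: bool = False) -> dict:
    """Build a Chat Completions request body using the latency-related settings."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "n": n,            # each extra completion adds generated tokens
        "stream": stream,  # True: tokens arrive as they are generated
    }
    if stop:
        body["stop"] = stop  # generation halts when any of these sequences appears
    return body


if __name__ == "__main__":
    body = chat_request("List three planets.", stop=["\n\n"], stream=True)
    print(json.dumps(body, indent=2))
```

Note that streaming does not shorten the total generation time; it lets you show the first tokens to the user sooner.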

Ethical Considerations

Any discussion about AI education must encompass ethical considerations. Fortunately, models like ChatGPT incorporate built-in restrictions to prevent harmful or offensive content. To encourage originality and reduce the risk of copyright infringement, you can end your prompt with a request that all generated content be original and free from copyrighted material.

In conclusion, prompt engineering is an emerging skill in the AI domain, and it was fascinating to learn from experienced prompt engineers. If you found this information insightful or helpful, I would appreciate your support through comments or claps.

Chapter 2: Video Insights on Prompt Engineering

In this section, we will explore insightful video content on prompt engineering.

Video Title: You Probably Don't Need Prompt Engineering!

This video discusses whether prompt engineering is necessary and offers insights into effective communication with AI models.

Video Title: Unlock a Career as a Prompt Engineer In 5 Minutes

This video provides a quick overview of how to start a career in prompt engineering, highlighting essential skills and strategies for success.